Hi! You are receiving this e-mail because at some point you subscribed to Weekly Robotics. I'm Mat Sadowski, and I've been running this newsletter since 2018. In June last year, I took a sabbatical from the newsletter, planning to finish Baldur's Gate 3 (that still hasn't happened) and then get back to it. In Q4 2024, my daughter was born, and to say things have been dynamic is an understatement.

If you are still subscribed after all these months, thank you for your patience, and let's jump back into the exciting world of robotics. It seems a lot has happened while I was away!

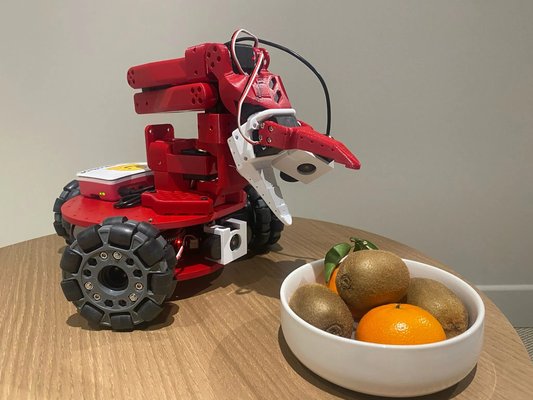

🤗 LeRobot: Making AI for Robotics more accessible with end-to-end learning

Hugging Face developed this machine learning library, and I’m seeing it more and more on social media. From the repo description:

🤗 LeRobot aims to provide models, datasets, and tools for real-world robotics in PyTorch. The goal is to lower the barrier to entry to robotics so that everyone can contribute and benefit from sharing datasets and pretrained models.

I’m quite excited that this library promotes open-source robots such as SO-100 and LeKiwi, and I can’t wait to find some spare time to experiment with this framework.

Just How Many Robots Can One Person Control at Once?

Spoiler: a human operator can manage a swarm of 100 robots (the experiments described in the article used 110 multirotors, 30 ground vehicles and up to 50 virtual robots). I think the key point here is that the robots are autonomous, so they don’t require the operator’s attention at all times, and if we think of RTS players, I can see how this feat is realistic.

ELEGNT: Expressive and Functional Movement Design for Non-Anthropomorphic Robot

Researchers at Apple created a Pixar-inspired robot lamp, with some great results. Have a look at the video embedded on the page to get an idea of how expressive this robot can be. The music-playing scenario really got to me. For the paper describing this work, please check out arXiv.

Helix: A Vision-Language-Action Model for Generalist Humanoid Control

Two weeks ago, Figure demonstrated their Vision-Language-Action (VLA) model, which is said to unify perception, language understanding and control. The idea of a single neural network handling all of this while providing 200 Hz upper-body control sounds fascinating! I’m excited to see how Figure develops this system further.

Online Kalman Filter Tutorial

Do you ever catch yourself thinking: “I should read up on how the Kalman filter works again. It’s been too long”? If that’s the case, have a look at this tutorial by Alex Becker. I think this is the most detailed tutorial on this filter I’ve come across since I started publishing this newsletter close to 7 years ago.
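If you just want a quick refresher before diving into the tutorial, here is a minimal sketch of a scalar (1D) Kalman filter. It is not taken from the tutorial; it assumes a quantity that stays roughly constant, with hypothetical process noise variance `q` and measurement noise variance `r`, and the class name `Kalman1D` and the sample readings are made up for illustration. It shows the two steps every Kalman filter alternates between: predict (uncertainty grows) and update (blend in a measurement, weighted by the Kalman gain).

```python
from dataclasses import dataclass


@dataclass
class Kalman1D:
    """Hypothetical scalar Kalman filter for a (nearly) constant quantity.

    Assumed model, for illustration only:
      state:       x_k = x_{k-1} + w,  w ~ N(0, q)
      measurement: z_k = x_k + v,      v ~ N(0, r)
    """

    x: float  # current state estimate
    p: float  # variance (uncertainty) of the estimate
    q: float  # process noise variance (assumed)
    r: float  # measurement noise variance (assumed)

    def predict(self) -> None:
        # The state is modelled as constant, so the estimate carries over
        # unchanged; only its uncertainty grows by the process noise.
        self.p += self.q

    def update(self, z: float) -> float:
        # Kalman gain: 0 means "ignore the measurement", 1 means "trust it fully".
        k = self.p / (self.p + self.r)
        # Move the estimate towards the measurement by a fraction of the innovation.
        self.x += k * (z - self.x)
        # Incorporating a measurement always shrinks the uncertainty.
        self.p *= 1.0 - k
        return self.x


# Usage: start with a vague prior (large p) and feed in noisy readings.
kf = Kalman1D(x=0.0, p=1000.0, q=1e-4, r=0.25)
for z in [10.2, 9.8, 10.1, 9.9, 10.0]:
    kf.predict()
    kf.update(z)
print(f"estimate={kf.x:.3f}, variance={kf.p:.4f}")
```

Because the initial variance is large, the first gain is close to 1 and the filter essentially adopts the first reading; as the variance shrinks, each new measurement moves the estimate less, which is the behaviour the tutorial builds up to in much more detail (and for multidimensional states).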

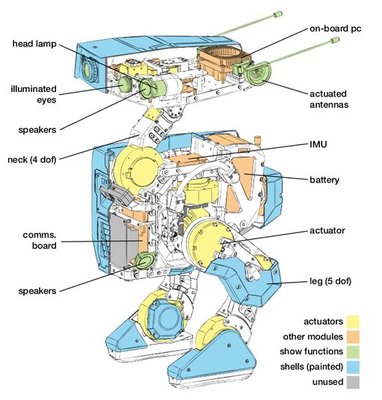

Design and Control of a Bipedal Robotic Character (2024)

This paper caught my attention just after I went on my sabbatical, and I thought it would be a great feature for WR 2.0, so here we are! I recommend checking out the paper featured here, as it describes these Disney bipedal robots in quite a bit of detail. For example, you can learn that the authors gave up on ankle roll actuators and instead used rounded-off soles made of urethane foam, which provide decent damping.

The paper describes the software architecture in detail as well. Reinforcement learning was used to train the control policies, and an animation engine generates the ‘artistic’-style motion of these robots.

A Roboticist Visits FOSDEM 2025

Earlier this year, I helped organize the robotics devroom at FOSDEM 2025. We had a fantastic turnout and some very interesting talks. In the blog post, I describe my experience and link to some talks that you might find interesting. The team is set on doing this again next year, so please feel free to join us in Brussels!

Events

- ProMat 2025: Mar 17 - Mar 20, 2025. Chicago, Illinois, United States of America

- European Robotics Forum 2025: Mar 25 - Mar 27, 2025. Stuttgart, Germany

- RoboSoft 2025: Apr 23 - Apr 26, 2025. Lausanne, Switzerland

- Farm Robotics Challenge: Apr 24, 2025 (deadline: Dec 12, 2024). Davis, California, United States of America

- Robotics Summit & Expo 2025: Apr 30 - May 01, 2025. Boston, Massachusetts, United States of America

- Automate 2025: May 12 - May 15, 2025. Detroit, Michigan, United States of America

- ICUAS (UAVs) 2025: May 14 - May 17, 2025. Charlotte, North Carolina, United States of America

- Xponential 2025: May 19 - May 22, 2025. Houston, Texas, United States of America

- ICRA 2025: May 19 - May 23, 2025. Atlanta, Georgia, United States of America

- Eurobot Open 2025: May 28 - May 31, 2025. La Roche-sur-Yon, France

For more robotics events, check out our event page.