This issue is an ICRA 2023 special. It differs from our usual format in that it features more products and is more opinionated. As usual, the publication of the week section is manned by Rodrigo. Last week’s most clicked link was the Tidybot, with 22.5% opens. Header image source: ©IEEE ICRA 2023.

Open Navigation LLC Launches to Support ROS 2 and Nav2 Communities! [Sponsored]

Open Navigation LLC has launched to support, develop, and maintain Nav2 and the mobile robotics ecosystem in ROS. As many of you know, Steve Macenski left Samsung Research in March, which left things in an uncertain place. Well, he's back now with a plan to make Nav2 and the ROS community stronger than ever. However, Open Navigation needs your help to make it happen!

(Boston Dynamics) AI Institute

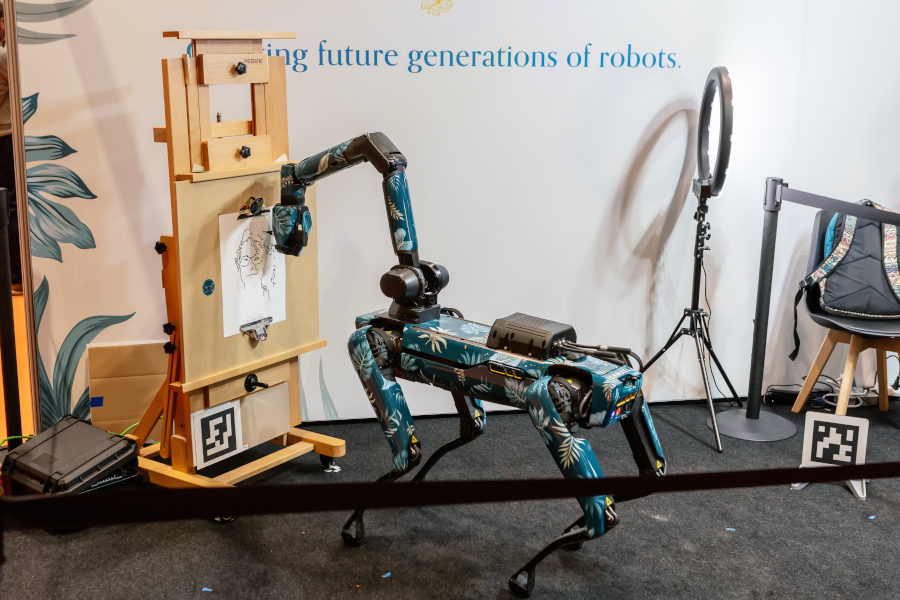

Boston Dynamics Spot as an artist. Image: ©IEEE ICRA 2023.

The AI Institute had a strong presence at the show. In their booth, you could find two Spots with an interesting livery taking pictures of their models and then inking the portraits on a piece of paper, drawing (pun intended) quite a crowd. During his keynote, Marc Raibert said he would like the AI Institute to be “the Bell Labs of Robotics”. One super exciting takeaway from Marc’s talk was the spot_ros2 driver developed by the Institute. I feel nothing but excitement for the initiative, and I can’t wait to see what comes out of it in the next ten years.

Open-source simulation for robotics with O3DE

During the event, I came across Robotec, a company working on open-source simulation. The article shows how they created a ROS 2 gem for the Open 3D Engine. I was unaware of this work before ICRA, so I am sharing it here.

Sony’s Haptic Demo

Having worked with a Novint Falcon a long time ago (a device that was way ahead of its time, if you ask me), I was excited to find so many engaging haptic interfaces on the exhibition floor. One was Sony’s integration of Touch X. In their demo, you would see three cubes of different stiffness on a 3D eye-tracking display, and as you touched them with the stylus, you would feel the reaction force pushing back.

Avatar Savoir Vivre

Two systems from ANA AVATAR XPRIZE: Nimbro (left) and i-botics (right).

An interesting thought occurred to me while visiting one of the stands. Humans treat robots as objects and don’t worry about their physical boundaries (as there are none?). This can be tricky for avatar interfaces that provide haptic feedback to the user. When you shake hands with a humanoid robot, the forces are passed directly to the user, who might not appreciate it. A handful of organizations at ICRA were showcasing their solutions for the ANA AVATAR XPRIZE, two of them with haptic interfaces: i-botics (right) and Nimbro (left).

Direct Visual LiDAR Calibration

During one of the poster sessions, I caught this paper by researchers from the National Institute of Advanced Industrial Science and Technology in Japan. The package performs targetless extrinsic camera-LiDAR calibration and works with many LiDARs and cameras. For more information about this project, check out the project video or the paper.

Ready to Operate

Da Vinci system on the exhibition floor.

You could test the Da Vinci surgical robot in Intuitive’s booth. After sitting down in front of the console, you would place your middle finger and thumb on the pinching mechanisms. As you moved your hands, the robot actuators would react accordingly, and you would see the positions of your end-effectors in the visor. In the demo, you could manipulate small rubber bands in an environment made of polymers. I had never seen one of these systems live before, and I was surprised by how easy and intuitive it was to operate. Here is a video I took of the demonstrator.

Publication of the Week - Distributed Data-Driven Predictive Control for Multi-Agent Collaborative Legged Locomotion (2023)

Winner of an outstanding paper award at ICRA 2023, this paper presents a data-driven planner for multi-agent quadrupedal robots. The combined dynamics of the quadrupedal agents become increasingly complex as agents are added, so the authors used a data-driven predictive control (DDPC) approach. The data is collected in the RaiSim simulator, with up to 5 robots collaborating. The DDPC was tested on three quadrupeds linked by ball joints, forcing them to work together. This video shows the robots’ robustness in walking over unstructured wooden blocks.